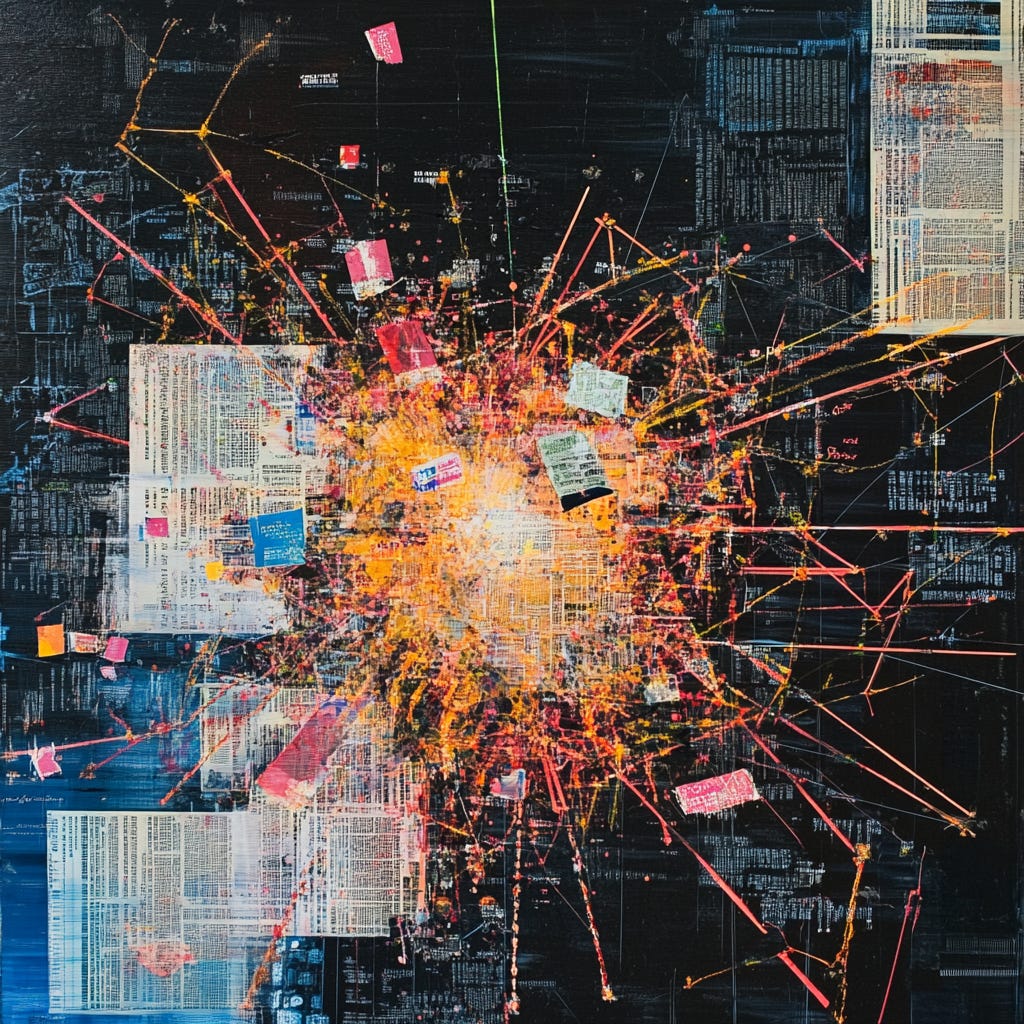

The Architecture of Deception: Mapping the Evolution of Strategic Disinformation in Modern Politics

The transformation of information warfare from a supplementary tactic into a central strategic pillar of political power is one of the most significant developments in modern governance. Propaganda and misinformation have existed throughout history, but the architecture of deception that characterizes contemporary political discourse marks a fundamental shift in both scale and methodology.

The origins of modern disinformation strategy can be traced to several pivotal developments in the mid-20th century. The emergence of mass media, particularly television, created new opportunities for information control and narrative manipulation. However, it was the Soviet Union's sophisticated development of "active measures" that laid the groundwork for contemporary disinformation strategies. These operations went beyond simple propaganda, seeking to create alternative reality frameworks that could fundamentally reshape public perception of political and social events.

The end of the Cold War, rather than diminishing these practices, led to their evolution and adoption by a wider range of actors. The democratization of information technology, particularly the internet, created new vectors for disinformation that proved particularly effective in open societies. The key innovation during this period was the recognition that absolute control of information was less effective than creating an environment of perpetual uncertainty and competing narratives.

The emergence of social media platforms sharply expanded the potential for strategic disinformation. These platforms provided unprecedented opportunities for micro-targeting specific demographics, rapidly disseminating tailored narratives, and creating self-reinforcing information bubbles. Perhaps most significantly, they enabled the automation of disinformation through bot networks and algorithmic content manipulation.

Private sector actors played a major role in this evolution. While state intelligence agencies pioneered many disinformation techniques, private companies, particularly in the data analytics and social media sectors, developed tools for behavior modification and opinion shaping that came to rival, and in some respects exceed, state capabilities. The Cambridge Analytica scandal exposed only the surface of a much deeper ecosystem of private sector psychological manipulation tools.

The development of artificial intelligence and machine learning technologies has opened new frontiers in disinformation strategy. These technologies enable increasingly convincing deepfakes, automated content generation, and predictive modeling of how information spreads. The ability to create and distribute convincing false narratives at scale represents a fundamental challenge to traditional concepts of political discourse and democratic decision-making.

The methodologies employed in modern disinformation campaigns reveal a sophisticated understanding of cognitive psychology and social dynamics. Rather than simply promoting false information, contemporary strategies focus on creating entire ecosystems of uncertainty that undermine the very concept of objective truth. This approach, often referred to as "computational propaganda," combines traditional psychological manipulation with advanced data analytics and artificial intelligence.

A key innovation in modern disinformation strategy is "flooding the zone": overwhelming information channels with multiple, often contradictory narratives. The technique is effective because it exploits cognitive limits on processing complex information. Faced with many competing narratives, individuals often default to explanations that confirm their existing biases rather than investing the effort needed to determine what is true. This tendency has been systematically exploited by various actors, from state intelligence agencies to corporate influence operations.

The role of technological platforms in amplifying disinformation merits particular attention. Social media algorithms, designed to maximize engagement, inadvertently create favorable conditions for the spread of emotionally charged false information, because they tend to promote content that generates strong emotional responses regardless of its accuracy. The monetization of attention has in turn created economic incentives that reward the creation and distribution of misleading content.
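The dynamic described above can be illustrated with a deliberately simplified sketch. Everything in it is a hypothetical assumption made for illustration: the `Post` fields, the `predicted_engagement` formula, and the sample posts do not describe any real platform's ranking system. The point is only that when accuracy is not an input to the score, it cannot influence the ordering.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Post:
    title: str
    emotional_intensity: float  # hypothetical score between 0 and 1
    accurate: bool              # known to us, but never read by the ranker


def predicted_engagement(post: Post) -> float:
    # Toy assumption: engagement grows with emotional intensity.
    # Note that post.accurate is not used anywhere in this score.
    return 0.1 + 0.9 * post.emotional_intensity


def rank_feed(posts: List[Post]) -> List[Post]:
    # Order the feed purely by predicted engagement, highest first.
    return sorted(posts, key=predicted_engagement, reverse=True)


posts = [
    Post("Measured policy explainer", 0.2, True),
    Post("Outrage-inducing rumor", 0.9, False),
    Post("Alarming but verified report", 0.6, True),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{predicted_engagement(post):.2f}  accurate={post.accurate}  {post.title}")
```

In this toy feed the false but emotionally charged item ranks first, not because the ranker prefers falsehood but because it is indifferent to it.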

Private sector actors have emerged as crucial players in the disinformation ecosystem. Companies specializing in "strategic communication" and "reputation management" have developed sophisticated tools for manipulating public opinion that rival or exceed state capabilities. These private sector innovations often find their way into state-sponsored disinformation campaigns, creating a complex web of public-private partnerships in the manipulation of information flows.

The emergence of automated disinformation systems represents a particularly significant development. Machine learning algorithms can now generate convincing fake news articles, social media posts, and even video content at scale. These systems can analyze vast amounts of user data to create tailored disinformation campaigns targeted at specific demographic groups or psychological profiles. The ability to automate both content creation and distribution has dramatically increased the potential scale of disinformation operations.

The role of legitimate media in amplifying disinformation, often unwittingly, presents another crucial aspect of modern information warfare. Traditional media outlets, under pressure to maintain relevance in a rapidly changing information environment, frequently find themselves serving as vectors for strategic disinformation. The practice of reporting on disinformation campaigns, even critically, often serves to further distribute the underlying narratives.

Sophisticated disinformation systems pose fundamental challenges to traditional concepts of political discourse and decision-making in democratic societies. The ability to systematically manipulate public perception through coordinated campaigns threatens the basic assumptions underlying democratic governance. The erosion of shared reality frameworks makes meaningful political dialogue increasingly difficult, if not impossible.

Perhaps most troublingly, the emergence of "reality marketplaces" where competing narratives vie for acceptance represents a fundamental shift in how societies process information. Rather than evaluating information based on evidence and logical consistency, individuals increasingly "shop" for realities that conform to their existing beliefs and preferences. This phenomenon has been systematically exploited by various actors to create parallel information universes that serve specific political or economic interests.

The role of artificial intelligence in future disinformation operations presents particularly concerning possibilities. As language models and content generation systems become more sophisticated, the ability to create convincing false narratives at scale will increase dramatically. The potential emergence of fully automated disinformation ecosystems, capable of generating and adapting content in real-time response to changing circumstances, could overwhelm traditional fact-checking and verification mechanisms.

Countermeasures to modern disinformation present complex challenges. Technical solutions, such as improved content moderation algorithms or blockchain-based verification systems, often prove inadequate against sophisticated psychological manipulation techniques. Educational approaches that focus on media literacy and critical thinking are valuable but struggle to keep pace with evolving disinformation methodologies. The fundamental challenge is one of asymmetry: creating and distributing false information requires far fewer resources than verifying and correcting it.
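The asymmetry can be made concrete with a small back-of-the-envelope model. The function name `verification_backlog` and the daily rates used here are hypothetical, chosen only to show the shape of the problem, not measured from any real operation: when false claims are produced faster than they can be checked, the pile of unverified claims grows without bound.

```python
from typing import List


def verification_backlog(claims_per_day: int, checks_per_day: int, days: int) -> List[int]:
    """Return the number of unverified claims at the end of each day."""
    backlog = 0
    history = []
    for _ in range(days):
        # New claims arrive, fact-checkers clear what they can,
        # and the backlog can never drop below zero.
        backlog = max(0, backlog + claims_per_day - checks_per_day)
        history.append(backlog)
    return history


# Hypothetical rates: 50 new false claims per day, 5 verifications per day.
history = verification_backlog(claims_per_day=50, checks_per_day=5, days=7)
print(history)
```

Under these assumed rates the backlog grows by 45 claims every day. Adding more fact-checkers narrows the gap only linearly, while automated content generation can raise the production rate at little extra cost.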

The potential emergence of epistemic security as a crucial domain of national defense merits serious consideration. Just as nations maintain military forces to protect physical sovereignty, the protection of information spaces may require dedicated resources and strategies. However, the development of such capabilities raises serious questions about the balance between security and freedom of expression.

Looking forward, several potential trajectories emerge. One possibility involves the continued fragmentation of shared reality frameworks, leading to increasingly parallel societies operating under different sets of assumed facts. Another scenario might see the emergence of new social technologies for establishing and maintaining consensus reality in the face of systematic manipulation attempts. The resolution of these competing possibilities will likely shape the future of political organization and social cooperation.

The most crucial insight may be recognizing that traditional concepts of truth and objective reality face fundamental challenges in an era of sophisticated information warfare. The ability to maintain functional democratic systems may require developing new frameworks for establishing shared understanding that can resist systematic manipulation while preserving essential freedoms. This challenge represents one of the most significant obstacles facing contemporary societies.

The future of political discourse and democratic decision-making will likely depend on the development of new social technologies capable of establishing and maintaining shared reality frameworks despite sophisticated manipulation attempts. Whether such technologies emerge from technical innovation, social organization, or some combination of both remains to be seen. What seems clear is that the current trajectory of information warfare poses an existential challenge to democratic governance and social cooperation as they have traditionally been understood.