Epistemic Infrastructure: Building Shared Truth in an Era of Disaggregation

The Construct of Collective Perception

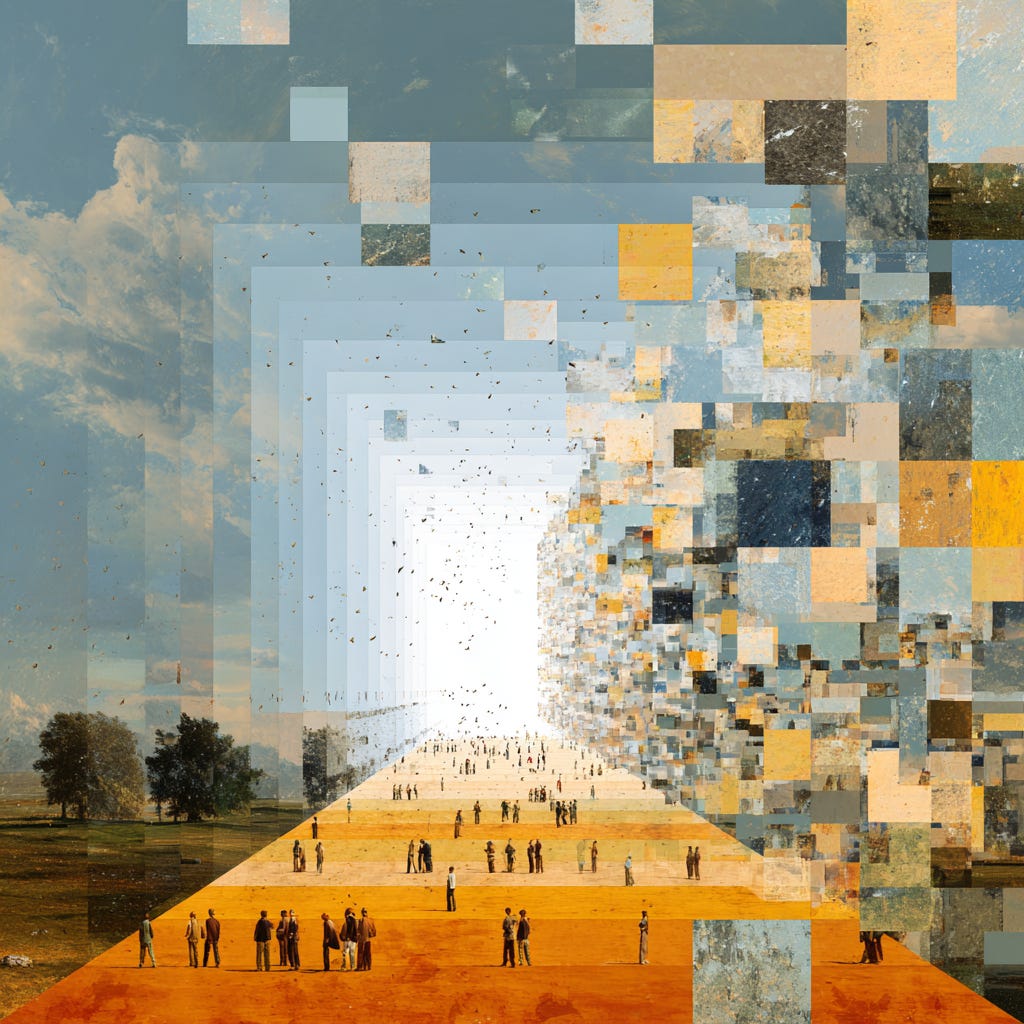

Every functioning society operates on a foundation invisible to most of its inhabitants: a shared system for determining what counts as true, what merits attention, and what can be dismissed as noise or fabrication. This infrastructure for collective sense-making has historically been so taken for granted that it rarely received explicit recognition until it began to fail. Now, as that failure accelerates, we confront an uncomfortable reality: the mechanisms through which societies construct shared understanding have become unstable, contested, and increasingly inadequate to the informational environment they must navigate.

The concept of epistemic infrastructure refers to the institutions, practices, and technologies that mediate between raw information and collective knowledge. These systems determine which claims receive credibility, which sources deserve trust, and how disagreements about facts get resolved. They include obvious elements like newspapers, scientific journals, and educational curricula, but also subtler components like the norms governing expertise, the procedures for fact-checking, and the social sanctions against deliberate falsehood.

For most of the twentieth century, this infrastructure remained relatively stable in developed democracies. A manageable number of institutional gatekeepers controlled access to mass audiences. Professional standards governed journalism, scholarship, and public discourse. Educational systems transmitted broadly similar versions of history, science, and civic knowledge. The architecture was never perfect or fully trusted by everyone, but it functioned adequately enough that citizens could navigate daily life with reasonable confidence about basic factual questions.

This functional adequacy has collapsed with remarkable speed. The transformation began with technological changes that disrupted traditional information gatekeepers, but it has metastasized into something far more profound: a crisis in the social capacity to distinguish truth from fabrication, expertise from performance, and knowledge from mere assertion. The crisis manifests not just in polarized politics or conspiracy theories but in the erosion of any shared basis for resolving factual disputes or building collective understanding.

The Twentieth Century Consensus

Understanding the current crisis requires examining what preceded it. The mid-twentieth century epistemic infrastructure in the United States and similar societies rested on several interlocking components that together created something approximating a shared reality across diverse populations.

Mass media organizations operated as the primary mechanism for determining what information reached public attention. Three television networks, a handful of national newspapers, and local broadcast stations controlled most of what Americans saw and heard about events beyond their direct experience. This oligopoly exercised enormous power over the public agenda, deciding which stories merited coverage and how those stories should be framed. The concentration of power troubled critics who worried about manipulation and bias, but it also enabled a degree of coordination that seems unattainable today.

These media organizations operated according to professional norms that constrained how they exercised their gatekeeping authority. Journalists distinguished news from opinion, verified claims before publication, and sought to present multiple perspectives on contested issues. The standards were imperfect and often violated, particularly regarding coverage of marginalized communities and foreign affairs. But the existence of standards created accountability mechanisms and established baselines for acceptable practice.

Educational institutions reinforced this system by transmitting similar knowledge and interpretive frameworks across the population. History textbooks presented broadly consistent narratives about national development. Science curricula taught common understandings of natural phenomena. Civics classes explained governmental structures and democratic processes using shared conceptual vocabularies. Regional and ideological variations existed, but they operated within parameters narrow enough that citizens from different backgrounds could communicate about public issues using mutually comprehensible references.

Scientific and academic institutions provided additional credentialing and validation functions. Peer review filtered research before publication. Universities certified expertise through degree requirements and professional advancement criteria. Professional associations established ethical standards and disciplinary procedures. These mechanisms concentrated epistemic authority in credentialed experts whose pronouncements carried special weight in public discourse.

Religious institutions occupied a more complicated position, providing meaning and moral frameworks while generally accepting limits on their authority over factual claims about the natural world. The accommodation between religious and secular epistemologies proved fragile and contested, but it held well enough that disputes about evolution or cosmology remained contained rather than exploding into total epistemological warfare.

This architecture created what might retrospectively be recognized as the high-water mark of shared factual consensus in American society. Opinion remained deeply divided on values, policies, and political choices, but citizens across these divides could generally agree on basic facts about events, scientific findings, and historical occurrences. The consensus was never complete, never uncontested, and never fully inclusive of perspectives from marginalized groups. But it existed as a functional social reality that enabled democratic deliberation and collective decision-making.

The Digital Disruption

The internet promised to democratize access to information and break the stranglehold of traditional gatekeepers. In this vision, anyone could publish ideas, citizens could access diverse sources directly, and the marketplace of ideas would function more efficiently by reducing transaction costs for information exchange. The technology would enhance rather than undermine collective truth-seeking by expanding participation and exposing previously hidden information.

The reality has proven far more complex and considerably darker. The same technologies that enabled wider participation also demolished the economic models supporting professional journalism, fragmented attention across countless sources, and created new opportunities for deliberate deception. The gatekeepers fell, but no functional replacement emerged to perform the coordination and validation roles they had provided.

The economic foundation supporting journalism collapsed as advertising revenue migrated to digital platforms that employed no journalists producing original reporting but instead aggregated and redistributed content created by others. Newspapers shuttered bureaus, reduced staff, and struggled to find sustainable business models. Local journalism suffered particularly severe damage, leaving many communities without dedicated coverage of municipal government, schools, or civic institutions. The loss extended beyond specific news organizations to the broader ecosystem of accountability journalism that monitored powerful institutions and surfaced information that those institutions preferred to keep hidden.

Social media platforms filled part of the void left by declining traditional media, but they operated according to fundamentally different logic. Rather than editors making decisions about what information deserved attention based on professional judgments about newsworthiness and public interest, algorithmic systems optimized for engagement distributed content based on what generated clicks, shares, and sustained attention. The incentive structures favored emotional resonance over accuracy, confirmation of existing beliefs over challenging new information, and simple narratives over complex reality.

These platforms also enabled new forms of information manipulation that traditional media gatekeepers had made difficult if not impossible. Coordinated campaigns could amplify messages through bot networks and fake accounts, creating illusions of grassroots support. Disinformation could spread faster than corrections, reaching audiences before fact-checks appeared. Deep fakes and manipulated media could circulate widely before being identified as fabrications. The technological capacity to produce and distribute misleading information expanded dramatically while the social and institutional mechanisms for containing such information atrophied.

The Fragmentation of Attention

Perhaps more consequential than the decline of traditional gatekeepers has been the fragmentation of attention across countless sources and platforms. Where Americans in 1970 might have chosen among three television networks and one or two local newspapers, their counterparts in 2025 can access thousands of websites, apps, podcasts, and video channels, each catering to specific interests, ideologies, and aesthetic preferences.

This abundance creates what researchers have identified as filter bubbles and echo chambers, where individuals increasingly consume information that confirms rather than challenges their existing beliefs. The effect operates partly through user choice and partly through algorithmic curation that learns and reinforces preferences. Someone seeking information about climate change might encounter authoritative scientific consensus or elaborate conspiracy theories depending on their previous engagement patterns and social network connections.

The fragmentation extends beyond simple political polarization to affect the basic categories through which people interpret reality. Different information ecosystems provide not just different interpretations of shared facts but entirely different factual landscapes. An event might be treated as the most important story of the day in one information ecosystem while remaining completely invisible in another. Historical claims that seem obviously false to one audience appear as suppressed truth to another. Scientific findings accepted as settled by credentialed experts face rejection as fraudulent by communities trusting alternative sources.

This disaggregation undermines the possibility of productive disagreement. When opposing sides in a political dispute not only interpret facts differently but operate with incompatible factual premises, debate becomes impossible. Arguments consist of rival assertions backed by sources the other side considers illegitimate. The lack of common ground prevents not just agreement but even the mutual comprehension required for substantive engagement.

The educational system’s capacity to counteract this fragmentation has diminished as well. Students arrive in classrooms having been exposed to radically different information environments. Teachers attempting to establish common factual baselines face accusations of indoctrination from parents whose information sources have primed them to view educational institutions as adversaries. The curriculum itself becomes contested terrain where disputes about what children should learn reflect deeper disagreements about what counts as knowledge worth transmitting.

The Crisis of Expertise

The erosion of epistemic infrastructure has produced a particular crisis in the social standing of expertise. The twentieth century consensus granted credentialed experts special authority to make pronouncements about matters within their domains. Scientists spoke authoritatively about natural phenomena, economists about market behavior, physicians about health, and engineers about technological systems. This authority was never absolute or unquestioned, but it carried sufficient weight that expert consensus constrained the boundaries of acceptable opinion on factual questions.

This deference has collapsed with stunning rapidity. The collapse reflects multiple causes, some justified by genuine failings of expert institutions and others manufactured through deliberate campaigns to undermine credible authority. Experts have made high-profile errors, particularly regarding economic policy, foreign affairs, and public health. Professional consensus has sometimes lagged behind emerging evidence. The close relationships between credentialed experts and powerful interests have created reasonable suspicions about whose interests expert pronouncements actually serve.

But the loss of faith in expertise extends far beyond these legitimate criticisms to a more fundamental rejection of the entire concept that specialized knowledge and training confer any special insight. Online communities develop elaborate alternative theories about medicine, climate science, engineering, and history, treating professional expertise not as one voice among many but as active disinformation propagated by corrupt institutions. The distinction between informed judgment and mere opinion dissolves, replaced by a radical epistemological egalitarianism where everyone’s assessment counts equally regardless of relevant knowledge or training.

This development has proven particularly consequential during public health emergencies, where rejection of medical expertise has translated into vaccine refusal, promotion of unproven treatments, and resistance to public health measures. The consequences extend beyond individual health risks to collective action problems where managing contagion requires coordinated behavior based on shared understanding of epidemiological reality.

The crisis of expertise connects to broader anxieties about social hierarchy and democratic legitimacy. The claim that credentialed experts deserve special epistemic authority can appear as an assertion of class privilege, particularly when expert institutions remain demographically unrepresentative and geographically concentrated in coastal urban areas. Populist political movements have exploited these resentments, framing disputes about factual questions as conflicts between ordinary people’s common sense and corrupt elite manipulation.

The Attention Economy and Epistemic Pollution

The transformation of information distribution through digital platforms has created an attention economy where capturing and maintaining user engagement determines success. This economic logic has produced an environment of what can be understood as epistemic pollution: a situation where the informational commons becomes so saturated with misleading, false, and manipulative content that distinguishing signal from noise becomes prohibitively difficult even for well-intentioned actors.

The pollution operates through multiple mechanisms. Clickbait headlines and sensationalized framing attract attention at the cost of accuracy. Algorithmic amplification rewards emotional resonance over reliability. Coordinated manipulation campaigns inject fabricated claims into circulation faster than fact-checking organizations can identify and debunk them. The sheer volume of content overwhelms individual capacity to verify claims or trace sources.

Commercial incentives drive much of this pollution. Content farms generate articles optimized for search algorithms regardless of factual accuracy. Social media influencers discover that outrage and conspiracy theories generate engagement that translates into revenue. Advertising-supported business models create pressures to maximize pageviews and time spent consuming content without regard for the quality or truthfulness of what gets consumed.

Political actors have learned to exploit this polluted environment for strategic advantage. Deliberately introducing false information into circulation serves multiple purposes: it confuses opponents, energizes supporters, and degrades the overall information environment in ways that advantage those willing to abandon concern for truth. The tactic succeeds not by convincing everyone of specific falsehoods but by creating sufficient doubt about truth itself that citizens give up trying to distinguish fact from fabrication.

The pollution also operates at a meta-level by undermining trust in the institutions and processes that might otherwise help filter information. Accusations of bias, corruption, or incompetence against fact-checkers, journalists, and academic researchers make citizens less willing to accept their authority. When every source appears potentially compromised, individuals fall back on tribal affiliation and ideological priors rather than investing effort in genuine evaluation.

This polluted epistemic environment poses challenges that extend far beyond politics to affect every domain requiring shared factual understanding. Financial markets depend on reliable information about company performance and economic conditions. Public health requires accurate knowledge about disease transmission and treatment effectiveness. Environmental policy needs trustworthy data about ecological systems. When the information infrastructure becomes too polluted to support confident knowledge in any of these domains, collective decision-making degrades across all of them simultaneously.

The Mechanics of Disaggregation

The collapse of shared epistemic infrastructure has not produced chaos randomly distributed across society. Instead, disaggregation has followed predictable patterns shaped by technological affordances, economic incentives, and social psychology. Understanding these patterns reveals how the fragmentation of collective truth-making operates as a system rather than merely an accumulation of individual failures.

Algorithmic Curation and the Personalization of Reality

The recommendation algorithms deployed by major platforms have become the primary mechanism through which most people encounter information about the world beyond their immediate experience. These systems optimize for engagement metrics rather than epistemic quality, creating feedback loops that progressively narrow and distort the information environments that users inhabit.

The process operates subtly but powerfully. A user watches a video about a political controversy. The platform’s algorithm notes that this video kept the user’s attention and recommends similar content. The user watches another video from a related creator, confirming to the algorithm that this category of content successfully retains engagement. Over time, the recommendation system learns to surface increasingly specific variants of content that match the user’s demonstrated preferences.
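The loop described here can be made concrete with a toy simulation. The sketch below is purely illustrative and does not represent any real platform's system: the function name `simulate_personalization`, the `affinity` scores, and the update rule are all assumptions, chosen only to show how a recommender that reinforces whatever holds attention will steadily tilt a feed toward a user's strongest preference.

```python
def simulate_personalization(affinity, rounds=10, learning_rate=1.0):
    """Toy model of an engagement-driven recommender (illustrative only).

    affinity: hypothetical mapping of topic -> how strongly this user
              engages with that topic when it is shown (0..1).
    Returns a list with one dict per round, giving each topic's share
    of the feed in that round.
    """
    topics = list(affinity)
    # The recommender starts with no preference among topics.
    weights = {topic: 1.0 for topic in topics}
    shares_over_time = []

    for _ in range(rounds):
        total = sum(weights.values())
        # Feed composition is proportional to the learned weights.
        shares = {topic: weights[topic] / total for topic in topics}
        shares_over_time.append(shares)

        for topic in topics:
            # Engagement depends on how much was shown and how much
            # the user cares; the recommender rewards whatever worked.
            engagement = shares[topic] * affinity[topic]
            weights[topic] += learning_rate * engagement

    return shares_over_time


# Hypothetical user who engages most with one category of content.
history = simulate_personalization(
    {"skeptic": 0.9, "consensus": 0.3, "sports": 0.3}
)
for round_number, shares in enumerate(history):
    print(round_number, {topic: round(s, 3) for topic, s in shares.items()})
```

Under these assumptions the share of the feed devoted to the highest-affinity topic rises in every round, even though the model contains no intent to mislead, only a rule that rewards retention.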

This personalization creates several distinct epistemic problems. First, it progressively filters out information that might challenge or complicate the user’s existing framework. A person who initially watches content skeptical of climate science will receive recommendations for more climate skepticism, rarely encountering the scientific consensus or rebuttals to skeptical arguments. The algorithm has no stake in whether users encounter comprehensive information or develop accurate understanding of complex issues. Its function is retention, and retention correlates more strongly with confirmation than with challenge.

Second, the algorithmic curation creates what researchers call availability cascades, where certain narratives gain momentum through repeated exposure rather than through their epistemic validity. A claim that appears repeatedly in recommended content begins to seem credible through sheer familiarity, regardless of its relationship to reality. The psychological principle that repeated exposure increases acceptance combines with algorithmic amplification to spread dubious claims through communities faster than corrections can circulate.

Third, the platforms create perverse incentives for content creators who learn to optimize for algorithmic success rather than accuracy or nuance. Creators discover that sensationalism, emotional manipulation, and ideological certainty generate more engagement than measured analysis or acknowledgment of uncertainty. The economic rewards for producing low-quality content that performs well algorithmically exceed the rewards for producing high-quality content that serves genuine informational needs.

The result is millions of personalized information environments, each tuned to maintain engagement by delivering content that confirms the user’s existing beliefs and emotional dispositions. These environments are not randomly distributed but cluster around certain attractors related to political ideology, cultural identity, and aesthetic preferences. The clusters develop their own internal consistency, complete with shared narratives, trusted sources, and interpretive frameworks that make sense within that information ecosystem while appearing incomprehensible or obviously false from outside it.

The Economics of Attention and Epistemic Quality

The business models supporting digital platforms create systematic incentives against epistemic quality. Advertising-supported media depends on capturing and retaining attention, which correlates poorly with the attributes that make information valuable for collective decision-making. Accurate, nuanced analysis of complex issues typically attracts smaller audiences than sensationalized misrepresentation. Careful fact-checking and revision slow publication in ways that competitive pressures punish. Investment in investigative journalism produces uncertain returns compared to aggregating and repackaging content created by others.

This economic logic has produced a landscape where epistemic pollution becomes rational business strategy. Content farms employ low-wage workers or automated systems to generate articles optimized for search algorithms, creating vast quantities of text that appears informative while containing errors, distortions, or complete fabrications. Social media influencers discover that conspiracy theories and outrage-bait generate engagement that translates into revenue through advertising, subscriptions, and merchandise sales. Even legacy media organizations face pressure to compromise standards in pursuit of traffic and relevance.

The platforms themselves have grown into some of the most valuable companies in history while accepting minimal responsibility for the epistemic quality of content they distribute. Their business model depends on network effects and data accumulation that create winner-take-most dynamics, but these same features concentrate enormous power over information distribution without corresponding accountability for how that power gets exercised. The platforms claim to be neutral infrastructure while making countless decisions that shape what information circulates and what remains buried.

This economic structure creates asymmetries between truth and falsehood that favor the latter in competitive information environments. Fabricating compelling lies requires less effort than investigating complex reality. Sensationalism attracts more attention than nuance. Confirmation of existing beliefs spreads faster than information that requires updating mental models. The marketplace of ideas, rather than selecting for truth through competition, often selects for whatever generates engagement regardless of accuracy.
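The selection effect can be shown with a few lines of code. The sketch below is a hedged illustration, not a measurement: the `Item` records and their `engagement` and `accuracy` scores are invented for the example, on the assumption that the two qualities pull in opposite directions for the items considered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    engagement: float  # hypothetical predicted engagement, 0..1
    accuracy: float    # hypothetical accuracy rating, 0..1


def top_k(items, k, key):
    """Return the k highest-scoring items under the given ranking key."""
    return sorted(items, key=key, reverse=True)[:k]


def mean_accuracy(items):
    """Average accuracy of the items that made it into a feed."""
    return sum(item.accuracy for item in items) / len(items)


# Invented values for illustration only; not drawn from any dataset.
ITEMS = [
    Item("sensational rumor", engagement=0.9, accuracy=0.2),
    Item("outrage clip", engagement=0.8, accuracy=0.4),
    Item("measured explainer", engagement=0.4, accuracy=0.9),
    Item("investigative report", engagement=0.3, accuracy=0.95),
]

by_engagement = top_k(ITEMS, 2, key=lambda item: item.engagement)
by_accuracy = top_k(ITEMS, 2, key=lambda item: item.accuracy)

print("engagement-ranked feed:", [item.title for item in by_engagement])
print("accuracy-ranked feed:  ", [item.title for item in by_accuracy])
print("mean accuracy gap:", mean_accuracy(by_accuracy) - mean_accuracy(by_engagement))
```

The ranking function never inspects `accuracy` when it sorts by engagement, which is the point: a feed can lose reliability without anyone deciding to promote falsehood.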

The destruction of local journalism exemplifies these dynamics. Newspapers that once employed reporters to cover municipal government, school boards, and community institutions have shuttered or shrunk to skeletal operations. The journalism they produced had limited commercial value relative to its cost, but it provided essential informational infrastructure for local governance and civic life. Without that coverage, citizens lack access to information about how local institutions function, creating accountability deficits that enable corruption and mismanagement.

National and international journalism face different but related pressures. The collapse of traditional business models has forced news organizations to compete for digital attention in environments where they face competition from entertainment, social media, and content optimized purely for engagement. Some organizations have found success through subscription models, but these typically serve affluent audiences already committed to consuming news. Broad segments of the population increasingly receive no exposure to professional journalism at all, instead consuming information from sources with no professional standards or accountability mechanisms.

The Production of Alternative Realities

The fragmented information landscape has enabled the construction of alternative realities complete with their own histories, scientific theories, and interpretations of current events. These alternatives are not merely different perspectives on shared facts but comprehensive worldviews grounded in incompatible factual premises.

Consider how different information ecosystems have processed the COVID-19 pandemic. One ecosystem, centered on public health institutions and mainstream media, treated the pandemic as a genuine public health emergency requiring coordinated response based on epidemiological evidence. Another ecosystem treated the pandemic as exaggerated or fabricated, with public health measures representing authoritarian overreach rather than legitimate disease control. These are not different interpretations of the same events but fundamentally incompatible understandings of what actually happened.

The construction of alternative realities depends on several enabling conditions. First, the fragmented media environment allows communities to form around shared rejection of mainstream sources. Members reinforce each other’s skepticism of establishment institutions while developing trust in alternative sources that confirm their suspicions. The social dynamics of in-group formation strengthen commitment to alternative frameworks, making them progressively more resistant to contrary evidence.

Second, the availability of vast information archives online enables motivated reasoning at previously impossible scales. Whatever claim someone wishes to believe can find supporting evidence somewhere on the internet. The existence of supporting evidence, regardless of quality or context, provides psychological comfort that beliefs have rational justification. The ease of finding confirming information makes it unnecessary to grapple with disconfirming evidence or to develop sophisticated methods for evaluating source credibility.

Third, the platforms connecting these communities create feedback loops where alternative realities become self-reinforcing. Content that supports the community’s shared framework gets amplified through sharing and engagement. Content that challenges it gets ignored or actively rejected. Over time, the community develops its own canon of trusted sources, key claims, and interpretive lenses that make its alternative reality feel comprehensive and internally coherent.

These alternative realities prove remarkably resilient to correction. When mainstream sources debunk specific claims, adherents interpret the debunking as evidence of cover-up or bias rather than legitimate correction. The alternative reality includes meta-level claims about why establishment sources cannot be trusted, making it effectively unfalsifiable. Attempts at persuasion through evidence and argument can backfire, strengthening commitment to the alternative framework by providing opportunities to practice defensive arguments and reinforce group solidarity.

The phenomenon extends beyond marginal conspiracy theories to affect understanding of major historical and scientific questions. Climate science, evolutionary biology, economic history, and the causes of various social problems all exist in versions that differ radically across information ecosystems. These differences matter enormously for collective decision-making because they lead to incommensurable policy preferences grounded in incompatible understandings of reality.

The Weaponization of Disagreement

Sophisticated political actors have learned to exploit epistemic fragmentation for strategic advantage. The techniques go beyond traditional propaganda to include deliberate degradation of the information environment itself, creating confusion and doubt that serve specific political purposes even without establishing particular false beliefs.

The approach involves flooding the information space with conflicting claims, making it difficult for citizens to determine what actually happened or which sources deserve trust. When every side accuses the others of lying and presents apparently supporting evidence, many people give up trying to determine truth and fall back on tribal affiliation or the cynical assumption that everyone lies. This epistemic exhaustion benefits actors who prefer that citizens remain confused and disengaged rather than informed and mobilized.

Accusations of bias and partisanship against fact-checkers and neutral arbiters serve similar strategic purposes. By successfully tarring institutions that might help citizens navigate disagreements as themselves partisan actors, political operatives eliminate potential mechanisms for resolving factual disputes. When fact-checkers lose credibility, there exists no neutral ground on which to adjudicate competing claims. Disagreements become pure tests of political strength rather than questions susceptible to evidence and argument.

The strategic manipulation extends to exploiting the professional norms of legitimate journalism in ways that spread misinformation. Political figures make false claims that receive coverage because of the speaker’s prominence. The resulting news story includes the false claim in headlines and opening paragraphs, with corrections and context appearing later where fewer readers encounter them. The false claim circulates more widely than the correction, achieving the speaker’s goal of establishing a narrative regardless of its truth value.

Social media manipulation has become increasingly sophisticated, employing networks of fake accounts, coordinated posting strategies, and exploitation of platform algorithms to amplify desired messages and suppress unwanted ones. State actors and well-funded political operations employ these techniques to shape public discourse, creating illusions of grassroots sentiment or consensus where none actually exists. The manipulation proves difficult to detect and counter because it mimics organic behavior while operating at scales and with coordination impossible for genuine grassroots movements.

These tactics succeed partly because they exploit epistemic commons problems. Individual citizens lack the time, resources, and expertise to verify claims independently. They must rely on institutional mechanisms for filtering and validating information. When those mechanisms are deliberately degraded, citizens lose the capacity to distinguish truth from fabrication without personally investigating every claim. The cost of this investigation exceeds what most people can bear, leading to either withdrawal from information consumption or uncritical acceptance of sources that feel trustworthy for social or psychological reasons.

The Institutional Response and Its Failures

Established institutions have attempted various responses to epistemic disaggregation, but these efforts have largely failed to restore functional shared reality. The failures reflect both genuine difficulties in addressing the underlying problems and strategic mistakes that have sometimes worsened the situation.

Fact-checking organizations proliferated in response to growing concerns about misinformation, but their impact has proven limited. Fact-checks often reach smaller audiences than the false claims they address. The delay between a claim’s circulation and its debunking allows the claim to establish itself before correction appears. Perhaps most significantly, fact-checks can trigger what researchers have called the backfire effect, where correcting someone’s false belief strengthens rather than weakens their commitment to that belief, although studies disagree about how often this actually occurs.

The platforms themselves have implemented various content moderation policies, attempting to limit the spread of particularly harmful misinformation while avoiding accusations of censorship. These policies have satisfied almost no one. Critics from one direction argue that moderation represents unacceptable interference with free expression. Critics from the other direction contend that moderation remains inadequate to address the scale of misinformation and manipulation occurring on the platforms. The platforms themselves struggle to develop and enforce policies consistently across billions of pieces of content in dozens of languages addressing countless topics.

Educational initiatives to promote media literacy and critical thinking skills face their own challenges. Teaching people to question sources and verify claims sounds beneficial, but it risks reinforcing cynicism that treats all sources as equally suspect. Without providing reliable tools for actually determining what sources deserve trust, media literacy education may simply make people better at defending whatever beliefs they already hold. The initiatives also struggle with scale, reaching only small fractions of the population while requiring sustained effort and attention that many people cannot or will not provide.

Legislative and regulatory responses have mostly focused on platform accountability and transparency, but enforcement remains weak and the political will to impose meaningful constraints on powerful technology companies has proven elusive. Proposals to require algorithmic transparency, limit targeted advertising, or impose liability for harmful content face fierce lobbying resistance and difficult questions about implementation. The global nature of digital platforms complicates regulatory efforts as companies can forum-shop for favorable jurisdictions or route operations through locations with minimal oversight.

Academic research has documented the problem extensively, producing detailed studies of how misinformation spreads, how algorithms shape information consumption, and how epistemic polarization affects political behavior. This research has improved understanding but has not translated into effective interventions. The knowledge remains largely confined to specialist communities rather than informing practical responses at scale.

The Feedback Loop of Degradation

Perhaps most troubling is how epistemic disaggregation creates feedback loops that accelerate its own progression. As shared reality fragments, the tools and institutions that might restore it lose effectiveness. This degradation compounds over time, making each successive stage more difficult to reverse than the last.

The process operates through several mechanisms. As people sort into distinct information ecosystems, they lose the common reference points needed for productive disagreement. Arguments become increasingly futile as participants lack shared premises from which to reason. This futility breeds cynicism about the possibility of truth itself, creating permission to abandon epistemic standards entirely. Why worry about accuracy when determining truth seems impossible and everyone appears to be lying?

The sorting also degrades interpersonal trust networks. People discover that friends, family members, and colleagues inhabit fundamentally different informational realities. These discoveries strain relationships and make people more cautious about discussing contentious topics. The withdrawal from difficult conversations reduces opportunities for the kind of patient mutual education that might bridge epistemic gaps. Communities become less diverse in their information consumption as people self-segregate to avoid conflict.

Institutional credibility suffers cumulative damage that proves difficult to repair. Each real or perceived failure of mainstream institutions provides ammunition for critics who claim these institutions cannot be trusted. The criticism may be fair or unfair, justified by genuine problems or manufactured through strategic manipulation, but the effect accumulates regardless. Institutions that lose credibility cannot easily regain it simply by improving performance, because the audiences who have lost faith no longer attend to evidence of improvement.

The economic incentives driving epistemic pollution strengthen as the environment degrades. In a clean information environment, producing garbage carries reputational costs that discourage low-quality content. In an already polluted environment, these costs disappear as audiences lose the capacity to distinguish quality. The marginal reputational benefit of maintaining standards diminishes while the marginal economic benefit of abandoning them increases. This creates race-to-the-bottom dynamics where everyone faces pressure to match the lowest common denominator.

Political polarization and epistemic disaggregation reinforce each other in particularly vicious feedback loops. Political identity becomes tied to acceptance of specific factual claims, making those claims immune to evidence-based correction. Disagreement about facts becomes coded as political opposition, making people unwilling to concede factual points even when evidence clearly favors the other side. The politicization of factual questions makes those questions harder to resolve through neutral mechanisms, which further entrenches the polarization.

The result is a system that appears to lack stable equilibria other than complete fragmentation. Each component of the epistemic infrastructure depends on others functioning adequately. When multiple components fail simultaneously, restoration becomes exceptionally difficult because repairing any single component requires the others to already be working. The collective action problems involved in rebuilding shared epistemic infrastructure may prove even harder to solve than building it initially, because restoration requires coordination across communities that no longer trust each other or agree on basic premises about how to determine truth.

Reconstruction and the Future of Shared Reality

The disaggregation of epistemic infrastructure poses an existential challenge to democratic governance, market efficiency, and social cohesion. Addressing this challenge requires moving beyond diagnosis to consider what reconstruction might entail. The task is not simply restoring previous arrangements, which were themselves flawed and whose vulnerabilities the current crisis has exposed. Instead, reconstruction demands designing new institutional and technological architectures capable of supporting shared reality in conditions fundamentally different from those that prevailed during the twentieth century.

The Preconditions for Shared Truth

Any functional epistemic infrastructure must satisfy several requirements simultaneously. It must provide mechanisms for validating claims that citizens across diverse perspectives can accept as legitimate. It must create incentives for producing high-quality information that serves genuine informational needs rather than merely capturing attention. It must enable productive disagreement by establishing common factual grounds while allowing genuine disputes about values and priorities. And it must prove resilient to deliberate manipulation by actors seeking to degrade the information environment for strategic advantage.

These requirements create tensions that cannot be fully resolved, only managed through acceptable tradeoffs. Validation mechanisms powerful enough to filter misinformation effectively risk becoming tools for censorship or establishment control. Strong incentives for quality information production may require concentrating resources in ways that limit diversity of voices and perspectives. Common factual grounds depend on deference to expertise that some view as illegitimate hierarchy. Resilience to manipulation often requires restrictions on speech and activity that conflict with liberal values about expression.

The twentieth-century epistemic infrastructure managed these tensions through particular institutional arrangements that no longer function in current conditions. The question is whether new arrangements can achieve similar functionality without recreating the specific structures whose collapse created the current crisis. The answer likely involves multiple parallel efforts addressing different aspects of the problem rather than a single comprehensive solution.

Redesigning Information Platforms

The platforms mediating most information consumption must operate according to different logic than pure engagement optimization. This requires changing both their business models and their algorithmic systems in ways that align platform incentives with epistemic quality rather than mere attention capture.

Several approaches deserve serious consideration. Public interest algorithms could optimize for outcomes beyond engagement, incorporating measures of accuracy, source credibility, and informational value into content distribution. These systems would require developing metrics for epistemic quality that could be applied at scale, a technical challenge complicated by the need to avoid creating new vectors for manipulation or censorship. The metrics would need to prove robust against gaming by content creators seeking to optimize for visibility regardless of quality.

Chronological feeds and user-controlled filtering represent an alternative approach, returning agency to individuals rather than delegating curation to opaque algorithmic systems. This solution appeals to those prioritizing user autonomy but risks recreating the information overload problems that algorithmic curation was originally deployed to address. Without some filtering mechanism, users face impossible choices about how to allocate attention across vast quantities of available content.

Interoperability requirements could reduce platform lock-in and enable competition on epistemic quality rather than network effects. If users could easily move their social connections and content across platforms, they could choose services based on information environment quality rather than remaining trapped on platforms whose epistemic standards they find inadequate. The technical standards for such interoperability would require careful design to prevent race-to-the-bottom dynamics where platforms compete by offering the least restrictive content policies.

Alternatives to the advertising model, including subscriptions, public funding, or micropayments, could reduce dependence on attention-capture business models. Each approach presents distinct challenges. Subscription models risk creating epistemic stratification where affluent populations access quality information while others remain in advertising-supported environments optimized for engagement. Public funding raises governance questions about maintaining independence from political pressure. Micropayment systems face adoption hurdles and create friction in information access.

Platform governance reforms could distribute power more broadly through user councils, independent oversight boards, or multi-stakeholder governance structures. These mechanisms might make platforms more accountable to public interest considerations rather than purely private shareholder value. However, governance reform faces fierce resistance from platform companies and difficult questions about how to represent diverse user interests across global populations with incompatible values and priorities.

None of these approaches provides a complete solution in isolation, and each creates new problems while addressing existing ones. The optimal configuration likely involves combinations of mechanisms tailored to different contexts rather than universal prescriptions applied across all platforms and use cases. Experimentation remains necessary, but current regulatory frameworks and market concentration make experimentation difficult.

Rebuilding Institutional Credibility

Restoring functional epistemic infrastructure requires rebuilding trust in institutions capable of validating information and adjudicating factual disputes. This proves exceptionally difficult because trust, once lost, cannot be simply commanded back into existence through improved performance or better communication. Reconstruction requires both genuine reform of institutional practices and patient work to demonstrate that reform through sustained reliable operation.

Journalism institutions must develop sustainable business models that support quality reporting while remaining accessible to broad populations. The current trajectory toward subscriptions serving affluent audiences leaves large segments of society without access to professional journalism, creating dangerous information deserts. Solutions might include philanthropic support, public funding models adapted from European public broadcasting, or cooperative ownership structures that align institutional incentives with informational rather than commercial imperatives.

The journalism produced must also better serve diverse audiences and address historical failures in coverage of marginalized communities and perspectives. This requires not just adding diversity to newsrooms, though that remains important, but rethinking standard practices about sourcing, framing, and news judgment that have systematically privileged certain voices and viewpoints. The reform must occur without abandoning the professional standards that distinguish journalism from propaganda or the commitment to factual accuracy that distinguishes news from entertainment.

Scientific institutions face their own credibility challenges stemming from real failures, strategic attacks, and the inherent difficulty of communicating complex and uncertain findings to lay audiences. Rebuilding trust requires several simultaneous efforts: improving research integrity and addressing conflicts of interest that have undermined confidence, developing better methods for communicating scientific findings that acknowledge uncertainty without abandoning authority, and creating more accessible pathways for public engagement with the scientific process.

Educational institutions must find ways to transmit common foundational knowledge while respecting legitimate disagreements about values and interpretation. This requires distinguishing between factual questions that have clear evidence-based answers and normative questions where reasonable people can disagree. The distinction is not always sharp, but maintaining it remains essential for enabling education to provide shared reference points without becoming indoctrination.

Professional associations and credentialing bodies need to enforce standards more consistently while making their processes more transparent and accountable. When expertise loses credibility partly because credentialed experts have been involved in highly visible failures or conflicts of interest, restoration requires demonstrating that professional communities can effectively police themselves and hold members accountable for violations of epistemic standards.

Creating New Validation Mechanisms

Beyond reforming existing institutions, reconstruction may require developing new mechanisms for validating information that can function in fragmented information environments. These mechanisms must prove credible across diverse communities while remaining resistant to manipulation and capture.

Distributed fact-checking networks could enable validation through consensus across ideologically diverse evaluators rather than relying on centralized authorities. The approach faces challenges in recruiting participants, ensuring good-faith engagement, and developing protocols that produce reliable results rather than merely reflecting majority opinion. But distributed validation may prove more resilient to accusations of bias than centralized fact-checking while enabling scaling beyond what traditional fact-checking organizations can accomplish.
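To make the contrast with majority opinion concrete, here is a minimal sketch in Python of one possible acceptance rule: a verdict counts as validated only when evaluators in every group endorse it on their own. The function name, the group labels, and the threshold values are illustrative assumptions, not a description of any existing fact-checking network.

```python
from collections import defaultdict


def cross_group_consensus(ratings, threshold=0.6, min_per_group=2):
    """Return True only if every evaluator group endorses the claim.

    ratings: list of (group_label, verdict) pairs, where verdict is True
             when that evaluator judged the claim accurate.
    threshold and min_per_group are hypothetical tuning parameters.
    """
    by_group = defaultdict(list)
    for group, verdict in ratings:
        by_group[group].append(verdict)

    # Agreement inside a single group says nothing about cross-group consensus.
    if len(by_group) < 2:
        return False

    for verdicts in by_group.values():
        if len(verdicts) < min_per_group:
            return False
        if sum(verdicts) / len(verdicts) < threshold:
            return False
    return True


# 80% of all raters endorse the claim, but group B rejects it entirely.
lopsided = [("A", True)] * 8 + [("B", False)] * 2
# Both groups endorse the claim above the threshold.
bridged = [("A", True), ("A", True), ("A", False), ("B", True), ("B", True)]

print(cross_group_consensus(lopsided))
print(cross_group_consensus(bridged))
```

Under this rule the first claim is rejected despite its large overall majority, while the second is accepted because each group clears the threshold separately. How evaluators would be assigned to groups is left open here, and that assignment is itself a likely target for gaming.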

Transparency and provenance tracking for information could help users assess credibility by understanding where claims originate and how they have circulated. Technologies including cryptographic signing, blockchain-based verification, and provenance metadata could make it harder to launder misinformation through citation chains that obscure original dubious sources. However, these technical solutions face adoption challenges and risk creating new complexities that most users cannot navigate.
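As a rough illustration of what provenance metadata could look like, the following Python sketch links each repetition of a claim to the record it came from by hashing the previous record. The record fields and function names are assumptions made for this example. It demonstrates tamper evidence only: it does not authenticate who created a record, which would require digital signatures with real key management.

```python
import hashlib
import json


def record_hash(record):
    """Hash a record deterministically by serializing it with sorted keys."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain, source, claim):
    """Append a record that points at the hash of the previous record."""
    parent = record_hash(chain[-1]) if chain else None
    chain.append({"source": source, "claim": claim, "parent": parent})
    return chain


def verify_chain(chain):
    """Check that every record still matches the hash its successor stored."""
    for prev, cur in zip(chain, chain[1:]):
        if cur["parent"] != record_hash(prev):
            return False
    # An empty chain is trivially valid; otherwise the first record has no parent.
    return not chain or chain[0]["parent"] is None


chain = []
append_record(chain, "original-report", "Unemployment fell last quarter")
append_record(chain, "aggregator-blog", "Unemployment fell last quarter")
intact = verify_chain(chain)

# Quietly rewriting the original record breaks the link stored downstream.
chain[0]["claim"] = "Unemployment collapsed last quarter"
tampered = verify_chain(chain)
```

Here `intact` is true and `tampered` is false, because the second record still carries the hash of the unaltered original. The usability concern raised above applies in full: a check like this is only useful if tools surface the result in a form ordinary readers can interpret.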

Prediction markets and forecasting platforms could provide alternative validation mechanisms for empirical claims about future events. These systems aggregate information from diverse participants with different perspectives and incentivize accuracy through financial or reputational stakes. While unsuitable for many types of factual questions, prediction markets offer valuable tools for assessing probabilistic claims where traditional validation mechanisms struggle.
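One common way forecasting platforms reward accuracy is with a proper scoring rule such as the Brier score, the mean squared difference between stated probabilities and what actually happened. The short Python sketch below is illustrative only; the forecaster data is invented for the example.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better: 0.0 is a perfect record, and always answering 0.5
    scores 0.25 regardless of what happens.
    """
    if len(probabilities) != len(outcomes) or not probabilities:
        raise ValueError("need equal-length, non-empty inputs")
    total = sum((p - o) ** 2 for p, o in zip(probabilities, outcomes))
    return total / len(probabilities)


# Hypothetical forecasts on the same three yes/no questions.
outcomes = [1, 0, 1]
calibrated = brier_score([0.9, 0.2, 0.7], outcomes)
overconfident = brier_score([1.0, 1.0, 0.0], outcomes)
hedger = brier_score([0.5, 0.5, 0.5], outcomes)
```

The confident forecaster who was mostly right scores best, the forecaster who was certain and mostly wrong scores worst, and the one who always says 50% lands in between. Because the score penalizes misplaced confidence, it gives participants a reason to report what they actually believe.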

Community notes and collaborative annotation systems enable users to collectively add context and correction to circulating content. The Community Notes feature on X (formerly Twitter) has demonstrated some promise in this regard, though questions remain about scaling, gaming resistance, and ideological balance. The approach deserves further experimentation and refinement as one tool among many for improving information quality.

Red teaming and adversarial testing could help identify weaknesses in information systems before those weaknesses get exploited for manipulation. Deliberately attempting to break validation mechanisms, fool algorithms, or spread sophisticated misinformation in controlled settings provides valuable information about vulnerabilities that need addressing. This requires resources and expertise that most institutions currently lack but that seem essential for building robust epistemic infrastructure.

Addressing Structural Asymmetries

Reconstruction must confront fundamental asymmetries between truth and falsehood that advantage misinformation in competitive information environments. Truth is often complex, uncertain, and emotionally unsatisfying. Lies can be simple, confident, and emotionally resonant. Investigating reality requires time and resources. Fabricating compelling falsehoods requires less of both. These asymmetries create persistent challenges that cannot be fully eliminated but must be counteracted through deliberate institutional design.

One approach involves reducing the advantages that speed provides to misinformation. Social media platforms could implement friction in information sharing, requiring users to pause and verify before spreading claims. Mandatory delays between encountering information and sharing it could interrupt the impulse-driven spreading that advantages emotionally resonant misinformation. The friction must be carefully calibrated to reduce harm while preserving the genuine benefits of rapid information circulation during emergencies or breaking news.

Another strategy targets the economic advantages of producing low-quality content. Algorithmic demotion of known unreliable sources, advertising restrictions preventing monetization of misinformation, and platform policies reducing distribution of content from repeat offenders could make producing epistemic pollution less profitable. These interventions require careful implementation to avoid creating new problems through overreach or inconsistent enforcement.

Educational interventions must address not just media literacy but the psychological and social factors that make people vulnerable to misinformation. Teaching emotional regulation, providing tools for managing uncertainty, and building resilience against manipulation tactics may prove more valuable than teaching people to fact-check individual claims. This education needs to begin early and continue throughout life rather than consisting of episodic interventions.

Social and community-level interventions could help restore the interpersonal trust networks that once served as informal validation mechanisms. When people trust their neighbors and maintain relationships across ideological divides, they prove more resistant to misinformation because they have access to diverse perspectives and trusted sources of correction. Rebuilding these networks requires addressing the social atomization and political polarization that have eroded them, challenges that extend well beyond epistemic infrastructure into broader questions of social cohesion.

The Role of Regulation and Governance

Government regulation of information platforms and media systems remains contentious, with legitimate concerns about censorship and government overreach colliding with equally legitimate concerns about market failures and platform power. Finding appropriate regulatory frameworks requires balancing multiple values including free expression, epistemic quality, market efficiency, and democratic accountability.

Transparency requirements represent a relatively uncontroversial starting point. Platforms could be required to disclose their algorithmic ranking factors, content moderation policies, and advertising systems in ways that enable independent auditing without revealing trade secrets or creating new vectors for manipulation. This transparency would help users make informed choices about platform use and provide regulators with the information needed to assess whether platforms serve the public interest.

Interoperability mandates could reduce platform monopoly power and enable competition on service quality including epistemic standards. If users could easily move between platforms while maintaining their social connections, platforms would face stronger incentives to maintain information environments that users value. The technical standards for interoperability would need careful design, and the political will to impose such requirements on powerful companies remains uncertain.

Content liability frameworks could make platforms more accountable for particularly harmful content while preserving the broad protections that enable open online discourse. The current framework in the United States, Section 230 of the Communications Decency Act, provides strong protection from liability for user-generated content. Reform proposals range from narrow carve-outs for specific harms to comprehensive restructuring of platform liability. Any reform must navigate difficult tradeoffs between incentivizing content moderation and avoiding creating incentives for excessive censorship.

Public option platforms funded through taxes or fees could provide alternatives to commercial social media operating under different incentive structures. These platforms could optimize for public interest outcomes rather than profit maximization, potentially providing higher-quality information environments for users who choose them. The challenges include avoiding political capture, maintaining user appeal against well-resourced commercial competitors, and determining appropriate governance structures.

International coordination remains essential given the global nature of digital platforms, but achieving coordination proves exceptionally difficult given divergent values and interests across countries. Democratic nations might coordinate on shared standards while maintaining independence from authoritarian regimes seeking to export censorship and surveillance models. However, platform companies face pressure to harmonize policies globally, potentially leading to lowest-common-denominator standards that satisfy authoritarian demands.

The Long Reconstruction

Rebuilding functional epistemic infrastructure will require sustained effort over decades rather than quick fixes or silver-bullet solutions. The reconstruction must proceed on multiple fronts simultaneously, addressing technological, institutional, educational, and regulatory dimensions of the problem. Success remains uncertain and will depend partly on factors beyond anyone’s direct control including broader political and social developments.

The reconstruction also requires accepting certain limitations. Complete consensus about all factual questions is neither achievable nor desirable in pluralistic societies. Some degree of disagreement and multiple perspectives enriches public discourse and guards against stagnation. The goal is not eliminating disagreement but enabling productive disagreement grounded in sufficient shared reality that disputes can be resolved through evidence and argument rather than pure exercises of political power.

Similarly, perfect protection against misinformation and manipulation is impossible. Determined actors with sufficient resources can always find ways to circumvent safeguards and exploit vulnerabilities. The goal is raising the costs of manipulation and limiting its effectiveness to levels that preserve functional shared reality rather than eliminating deception entirely.

The reconstruction must also navigate tensions between short-term pressures and long-term needs. Electoral politics creates incentives for seeking immediate results and declaring victory prematurely. Genuine reconstruction requires patience, experimentation, failure tolerance, and willingness to invest resources in efforts whose payoffs remain distant and uncertain. Democratic systems struggle to maintain commitment to such long-term projects, but the alternative is continued epistemic degradation that ultimately undermines democracy itself.

Toward a New Epistemic Commons

The concept of epistemic infrastructure ultimately points toward something deeper than mechanisms and institutions: the need for a shared epistemic commons where citizens across diverse perspectives can meet to resolve factual disputes and build collective understanding. This commons must be constructed deliberately through institutional design, technological architecture, educational practice, and cultivated norms.

The commons must be genuinely public and accessible rather than privatized spaces controlled by commercial platforms optimizing for profit. It must welcome participation from diverse communities while maintaining standards that prevent degradation through manipulation and pollution. It must balance openness to challenge and revision with sufficient stability to enable coordination and planning. And it must prove resilient to the various pressures that destroyed previous attempts at constructing shared reality.

Building this commons requires recognizing that information is not merely a commodity to be produced and consumed through market mechanisms. Information has properties of a public good whose social value exceeds private returns, creating systematic underinvestment through purely commercial mechanisms. The epistemic commons requires public support and governance analogous to other public goods including physical infrastructure, public health, and environmental quality.

The reconstruction also demands reviving the idea that truth matters as more than personal preference or political weapon. Epistemic relativism, the claim that truth is merely what communities or individuals believe, proves corrosive to any possibility of shared reality. While acknowledging the complexity of knowledge production and the existence of multiple valid perspectives, a functional epistemic commons must rest on the premise that reality constrains belief and that claims can be wrong regardless of how many people believe them or how strongly.

The path forward will be difficult, contested, and uncertain. The forces that produced epistemic disaggregation remain powerful and continue to benefit actors who prefer that citizens remain confused and divided. The technological dynamics enabling rapid information circulation and algorithmic curation are not easily reversed or controlled. The social fragmentation and political polarization that both cause and result from epistemic disaggregation have momentum that will take decades to arrest and reverse.

Yet the alternative to reconstruction is continued degradation, which will make it increasingly difficult to coordinate responses to collective challenges including climate change, technological disruption, and the management of complex social systems. A society that cannot agree on basic facts cannot deliberate effectively about responses to those facts. Democracy depends on the possibility of changing minds through evidence and persuasion rather than merely mobilizing predetermined coalitions in zero-sum conflicts.

We face a choice between investing the sustained effort required to rebuild epistemic infrastructure capable of supporting shared reality, or accepting progressive fragmentation into mutually incomprehensible communities united only by inhabiting the same physical territory and being subject to the same laws. The latter outcome is entirely imaginable, but it would represent a fundamental transformation in what political community means and a loss of something essential to democratic self-governance. The work of reconstruction therefore represents more than a technical or institutional challenge. It embodies a commitment to collective truth-seeking and the belief that such truth-seeking remains possible even in conditions of deep disagreement and technological upheaval. Whether that commitment can be sustained, and whether the necessary reconstruction can succeed, will determine much about what kind of society emerges from the current crisis.

Thanks for writing this, it clarifies a lot. I truly appreciate how you highlight that the 'infrastructure for collective sense-making has historically been so taken for granted until it began to fail.' It’s like we never notice the operating system until it crashes. How do we even begin to debug this societal system? Such a sharp and timely insight.

There has never been a single epistemological framework. Our subjective frame of reference is a kaleidoscope of options, typically swayed by where the individual 'sits' on the rationality-faith axis. But in this historical context we avoided chaos. Not any more. Universal 'truths' derived from a pretense of objectivity are collapsing one by one. Not necessarily the laws of physics, but certainly second-order artefacts - long-term weather models, neural networks, public health policy - anything which can be hijacked by vested commercial interests, and consequently supercharged into political leverage. When facing criticism or calls for accountability, these special interests employ tropes such as "conspiracy theorists", but this pushback - like any well-worn antibiotic - is losing efficacy. Consequently trust simply melts away. The will to restore the equilibrium between rationality and faith is too weak (because there are too few who see the crisis), and I'm too much of a pessimist to see a way back.